Live sports are a universal language—but actual sports commentary is not. Official broadcasts typically offer one or two language options, leaving millions of fans without commentary in their native tongue. And beyond language, fans often want something different: a hometown perspective, a tactical analysis, a comedic take, or simply the voice of someone who shares their passion for a local club.

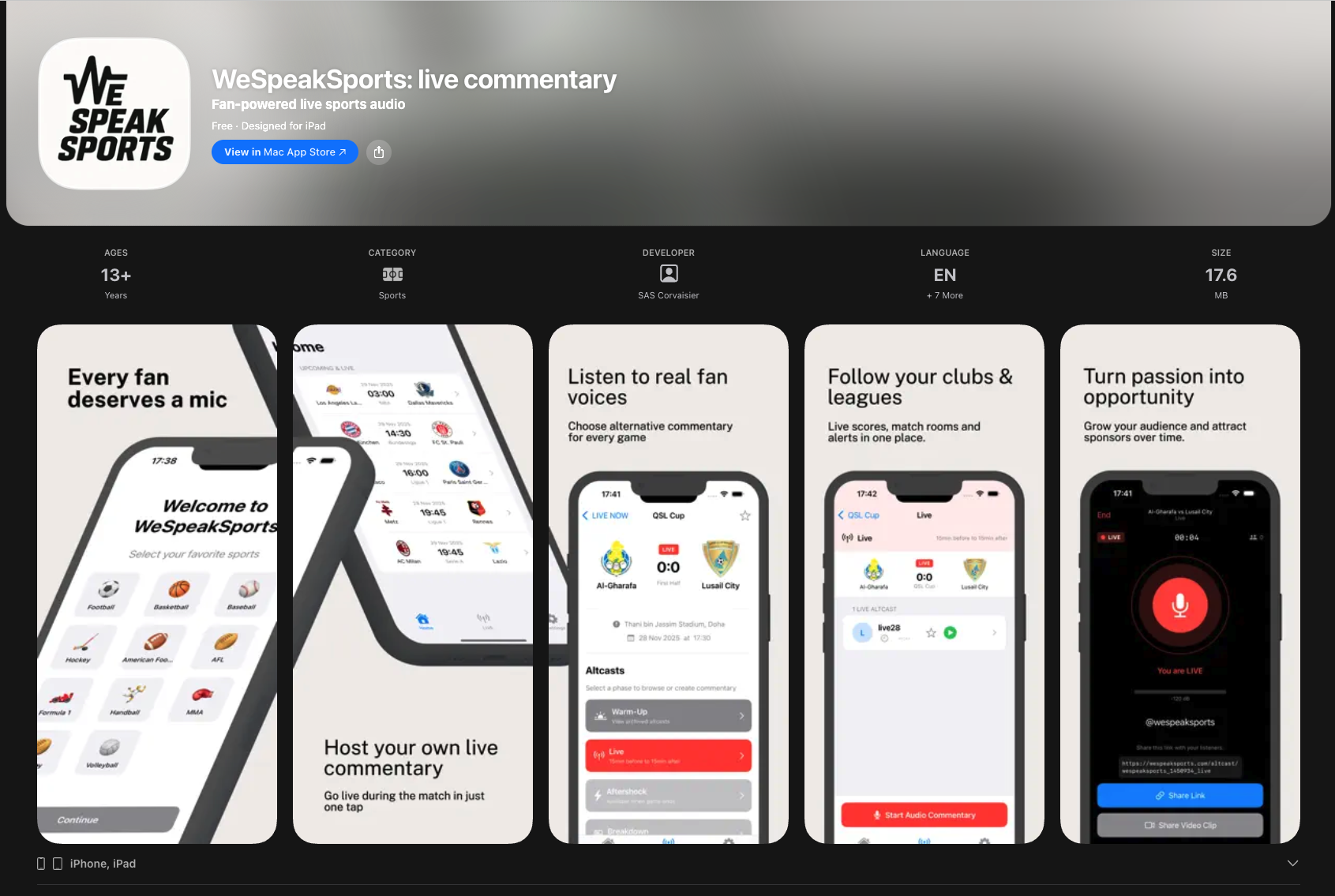

WeSpeakSports was built to unlock this potential. The platform enables fans worldwide to become altcasters—alternative broadcasters providing real-time, user-generated audio commentary in any language for any sporting event. This practice, known as altcasting, transforms passive viewers into active creators. Imagine watching your favorite team with commentary from a fellow supporter in your city, or following a foreign league with analysis in your native language. That is the vision: democratizing sports commentary through multi-language UGC (User-Generated Content).

But there is a catch. When fans provide live audio commentary, they face a unique technical challenge. Traditional streaming platforms introduce delays of 5 to 30 seconds—an eternity in sports where a single goal, touchdown, or buzzer-beater can change everything.

Imagine this scenario: a commentator reacts to a goal before viewers see it on their screens, spoiling the moment. Or worse, the viewer watches the goal and waits five seconds for the commentator to react, creating a frustrating disconnect. Either way, the user experience is fundamentally broken.

The Core Problem: Traditional streaming latency makes real-time sports commentary impossible. Commentary must synchronize with live action, or listeners will simply switch to alternatives.

The solution? A dual WebRTC architecture combining Ant Media Server and MediaSoup for maximum reliability and minimal latency. This infrastructure powers live commentary streams and opens the door to future features like archived commentary, which would let fans revisit historic matches with alternative audio tracks long after the final whistle.

Latency Comparison: WebRTC vs Traditional Streaming

Before diving into the technical implementation, let us understand why WebRTC was the only viable choice for this use case:

| Protocol | Typical Latency | Use Case |
|---|---|---|
| HLS (HTTP Live Streaming) | 6–30 seconds | VOD, large-scale broadcasts |
| DASH | 4–15 seconds | Adaptive streaming |
| RTMP | 2–5 seconds | Live streaming ingest |
| WebRTC (WeSpeakSports) | < 500ms | Real-time communication |

At under 500ms, WebRTC delivers latency roughly 4 to 60 times lower than the protocols in the table above (2 seconds for the fastest RTMP case, 30 seconds for the slowest HLS case). This is not just an incremental improvement; it is the difference between a synchronized experience and a broken one.

Architecture Overview

WeSpeakSports implements a dual-platform architecture that prioritizes both low latency and reliability. The infrastructure combines two powerful WebRTC servers running in parallel: Ant Media Server (AMS) serves as the primary platform, while MediaSoup provides independent redundancy. Both platforms are full-featured WebRTC implementations—MediaSoup is not just a fallback, it is a complete parallel streaming path.

    ┌─────────────────────────────────────────────────────┐
    │               WeSpeakSports Platform                │
    ├─────────────────────────────────────────────────────┤
    │   Web App (React/TypeScript) + iOS Native (Swift)   │
    │                                                     │
    │  ┌──────────────────┐       ┌────────────────────┐  │
    │  │ Platform 1       │       │ Platform 2         │  │
    │  │ Ant Media Server │       │ MediaSoup SFU      │  │
    │  │ WSS + WebRTC     │       │ Socket.IO + WebRTC │  │
    │  └────────┬─────────┘       └──────────┬─────────┘  │
    │           │     Parallel Streaming     │            │
    │           └─────────────┬──────────────┘            │
    │                         ▼                           │
    │  ┌───────────────────────────────────────────────┐  │
    │  │    STUN Servers (Multiple for redundancy)     │  │
    │  └───────────────────────────────────────────────┘  │
    └─────────────────────────────────────────────────────┘

Both platforms initialize simultaneously using Promise.allSettled(), ensuring that redundancy adds zero latency to the primary stream. If AMS experiences issues, MediaSoup is already running and ready for instant failover.

Key Performance Metrics

- <500ms End-to-End Latency

- 11 Sports Categories

- 8 Commentary Languages

- 20,000+ Clubs in Database

The Four Commentary Phases

WeSpeakSports recognizes that sports engagement does not start at kickoff and end at the final whistle. The platform supports four distinct commentary phases:

| Phase | Timing | Altcast Content |
|---|---|---|
| Warm-up | Before the game | Pre-match analysis, lineup reactions, predictions |
| Live | During the game | Real-time play-by-play commentary (sub-500ms critical) |
| Aftershock | Just after the game | Immediate reactions, emotional takes, hot takes |
| Breakdown | The next day | Tactical analysis, detailed breakdowns with replays |

A Note on Stadium Altcasting: When an altcaster broadcasts directly from the stadium, the ultra-low latency architecture can actually work too well. Since the altcaster sees the action live with zero delay, while TV viewers experience a 3-8 second broadcast delay, the audio commentary may arrive before the video.

Audio Optimization: Where Milliseconds Are Won and Lost

The most impactful optimizations happen at the audio layer. Default browser audio constraints add significant processing latency that is not acceptable for real-time sports commentary.

Production Audio Configuration

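    // Ultra-low latency audio constraints for getUserMedia
    const audioConstraints = {
      audio: {
        echoCancellation: false, // Saves ~20ms
        noiseSuppression: false, // Saves ~15ms
        autoGainControl: false,  // Saves ~5ms
        sampleRate: 48000,       // Professional quality
        channelCount: 1          // Mono = 50% less data
      }
    };

    // Not a getUserMedia constraint: the 48 kbps voice target is applied
    // at the encoder (for example via RTCRtpSender.setParameters).
    const targetBitrate = 48000; // Optimized for voice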

| Setting | Value | Latency Saved | Rationale |
|---|---|---|---|
| echoCancellation | false | ~20ms | Eliminates DSP delay and prevents flange artifacts |
| noiseSuppression | false | ~15ms | Removes processing that causes robotic sound |
| autoGainControl | false | ~5ms | Manual Web Audio API control (~2ms) instead |
| channelCount | 1 (mono) | 50% encoding | Half the data to encode and transmit |
| bitrate | 48 kbps | Minimal | Voice does not need music-quality bitrates |

Total Latency Reduction: These audio settings alone save 40–50ms compared to default browser constraints. Against a target of sub-500ms end-to-end latency, that is roughly 8–10% of the budget.
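The 48 kbps voice target from the table has to be enforced somewhere, and `getUserMedia` constraints have no bitrate field. One common approach is to cap the sender with `RTCRtpSender.setParameters`. Here is a minimal sketch of that idea, not the WeSpeakSports code: `VoiceSenderLike` and `capVoiceBitrate` are hypothetical names, and the structural types exist only so the example runs outside a browser.

```typescript
// Minimal structural types so the sketch runs outside a browser.
// In a browser, an RTCRtpSender exposes these same two methods.
interface VoiceEncodingLike {
  maxBitrate?: number;
}

interface VoiceSendParametersLike {
  encodings: VoiceEncodingLike[];
}

interface VoiceSenderLike {
  getParameters(): VoiceSendParametersLike;
  setParameters(params: VoiceSendParametersLike): Promise<void>;
}

// Hypothetical helper: caps every existing encoding at `maxBitsPerSecond`.
// Returns false when the sender has no encodings yet (for example, before
// negotiation has completed), in which case nothing is changed.
async function capVoiceBitrate(
  sender: VoiceSenderLike,
  maxBitsPerSecond: number = 48000,
): Promise<boolean> {
  const params = sender.getParameters();
  if (params.encodings.length === 0) {
    return false;
  }
  for (const encoding of params.encodings) {
    encoding.maxBitrate = maxBitsPerSecond;
  }
  await sender.setParameters(params);
  return true;
}
```

In a real page the sender would come from `peerConnection.getSenders()`, filtered to the audio track.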

WebSocket Signaling with Aggressive Keep-Alive

Keep-alive intervals of 30 seconds are far too slow for real-time applications: a dead signaling connection can go unnoticed for most of a minute. WeSpeakSports implements a 3-second heartbeat:

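    // 3-second WebSocket heartbeat
    this.pingTimerId = setInterval(() => {
      // Only ping while the socket is open; send() throws while it is still connecting
      if (this.wsConn.readyState !== WebSocket.OPEN) return;
      const jsCmd = { command: "ping" };
      this.wsConn.send(JSON.stringify(jsCmd));
    }, 3000);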
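Sending pings is only half of failure detection; something also has to notice when replies stop arriving. The sketch below shows one way to track that, assuming the server answers each ping with some reply. `HeartbeatMonitor` and the threshold of two missed intervals are illustrative assumptions, not details from the WeSpeakSports code.

```typescript
// Hypothetical liveness tracker for a fixed ping interval.
class HeartbeatMonitor {
  private readonly intervalMs: number;
  private readonly maxMissed: number;
  private lastPongAtMs: number;

  constructor(intervalMs: number = 3000, maxMissed: number = 2, startedAtMs: number = Date.now()) {
    this.intervalMs = intervalMs;
    this.maxMissed = maxMissed;
    this.lastPongAtMs = startedAtMs;
  }

  // Call whenever the server answers a ping.
  recordPong(nowMs: number = Date.now()): void {
    this.lastPongAtMs = nowMs;
  }

  // Number of whole ping intervals elapsed since the last reply.
  missedIntervals(nowMs: number = Date.now()): number {
    return Math.floor((nowMs - this.lastPongAtMs) / this.intervalMs);
  }

  // True once more than `maxMissed` intervals have passed with no reply.
  isStale(nowMs: number = Date.now()): boolean {
    return this.missedIntervals(nowMs) > this.maxMissed;
  }
}
```

With these assumed values, a silent connection is flagged 9 seconds after the last reply. The same rule at a 30-second interval would take 90 seconds.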

ICE Configuration for Optimal Connectivity

NAT traversal is critical for WebRTC connectivity. WeSpeakSports uses multiple redundant STUN servers:

    // Multiple STUN servers for redundancy
    iceServers: [
      { urls: "stun:stun1.example.com:19302" },
      { urls: "stun:stun2.example.com:19302" },
      // ... additional STUN servers
    ]

    // ICE candidate filtering - only UDP and TCP
    this.candidateTypes = ["udp", "tcp"];
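To make the candidate filter concrete, here is a small sketch of how a transport allow-list like `["udp", "tcp"]` could be checked against a raw ICE candidate line. It relies on the standard candidate format, where the transport is the third space-separated field. `candidateTransport` and `isAllowedCandidate` are hypothetical helpers written for this example, not the signaling library's API.

```typescript
// Extracts the transport field from an ICE candidate line of the form
// "candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> ...".
// Returns null when the line does not look like a candidate.
function candidateTransport(candidateLine: string): string | null {
  const normalized = candidateLine.trim().replace(/^a=/, "");
  const [head, , transport] = normalized.split(/\s+/);
  if (head === undefined || transport === undefined || !head.startsWith("candidate:")) {
    return null;
  }
  return transport.toLowerCase();
}

// True when the candidate's transport is in the allow-list.
function isAllowedCandidate(
  candidateLine: string,
  allowedTransports: readonly string[] = ["udp", "tcp"],
): boolean {
  const transport = candidateTransport(candidateLine);
  return transport !== null && allowedTransports.includes(transport);
}
```

Passing a narrower list such as `["udp"]` would drop TCP candidates, which can be useful when testing how the connection behaves on restrictive networks.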

Dual-Platform Architecture: Ant Media Server + MediaSoup

Ant Media Server provides battle-tested WebRTC infrastructure with WebSocket signaling. It handles the primary distribution with proven scalability.

MediaSoup is a Node.js-based SFU (Selective Forwarding Unit) using Socket.IO transport. It offers fine-grained control over media routing.

    // Parallel initialization - both start simultaneously
    Promise.allSettled([
      initializeAMSPublisher(),       // Platform 1: Ant Media Server
      initializeMediaSoupPublisher()  // Platform 2: MediaSoup
    ]);
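`Promise.allSettled()` never rejects, so the caller still has to inspect each result to learn which platform actually came up. The sketch below shows one way to do that. `startPublishers` and its report shape are assumptions made for illustration; the starter functions stand in for `initializeAMSPublisher()` and `initializeMediaSoupPublisher()`.

```typescript
type PublisherName = "ams" | "mediasoup";

interface PublisherReport {
  live: PublisherName[];
  failed: { name: PublisherName; reason: string }[];
}

// Hypothetical wrapper: starts both publishers concurrently and reports
// which ones came up. Neither start waits on the other.
async function startPublishers(
  starters: Record<PublisherName, () => Promise<void>>,
): Promise<PublisherReport> {
  const names: PublisherName[] = ["ams", "mediasoup"];
  // Calling every starter inside map() kicks them all off before any await.
  const settled = await Promise.allSettled(names.map((name) => starters[name]()));

  const report: PublisherReport = { live: [], failed: [] };
  settled.forEach((result, index) => {
    const name = names[index];
    if (name === undefined) return;
    if (result.status === "fulfilled") {
      report.live.push(name);
    } else {
      const reason =
        result.reason instanceof Error ? result.reason.message : String(result.reason);
      report.failed.push({ name, reason });
    }
  });
  return report;
}
```

A caller can then keep broadcasting as long as `report.live` is non-empty and hand each entry in `report.failed` to that platform's reconnection logic.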

Independent Reconnection Strategies

| Parameter | Ant Media Server | MediaSoup |
|---|---|---|
| Max Retries | 5 | 3 |
| Initial Delay | 2,000ms | 1,000ms |
| Max Delay | 30,000ms | 15,000ms |
| Backoff Multiplier | 2x (exponential) | 1.5x (gentler) |
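To see what these parameters mean in practice, the sketch below computes the retry delays each policy would produce. It assumes the delay starts at the initial value, is multiplied after every attempt, and is capped at the maximum. The table does not spell out the exact formula (or whether jitter is added), so treat this as one plausible reading.

```typescript
interface ReconnectPolicy {
  maxRetries: number;
  initialDelayMs: number;
  maxDelayMs: number;
  multiplier: number;
}

// Values taken from the table above.
const AMS_POLICY: ReconnectPolicy = {
  maxRetries: 5,
  initialDelayMs: 2000,
  maxDelayMs: 30000,
  multiplier: 2,
};

const MEDIASOUP_POLICY: ReconnectPolicy = {
  maxRetries: 3,
  initialDelayMs: 1000,
  maxDelayMs: 15000,
  multiplier: 1.5,
};

// Assumed formula: delay(n) = min(initial * multiplier^n, max), n = 0, 1, ...
function backoffDelays(policy: ReconnectPolicy): number[] {
  const delays: number[] = [];
  for (let attempt = 0; attempt < policy.maxRetries; attempt++) {
    const raw = policy.initialDelayMs * Math.pow(policy.multiplier, attempt);
    delays.push(Math.min(raw, policy.maxDelayMs));
  }
  return delays;
}

console.log(backoffDelays(AMS_POLICY));       // [2000, 4000, 8000, 16000, 30000]
console.log(backoffDelays(MEDIASOUP_POLICY)); // [1000, 1500, 2250]
```

Under this reading, only the fifth Ant Media Server retry reaches the 30-second cap, while MediaSoup gives up after three quick attempts spread over less than five seconds.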

Real-World Performance: The Latency Breakdown

| Stage | Latency | Notes |
|---|---|---|
| Microphone capture | ~10ms | Hardware dependent |
| Audio encoding (Opus) | <5ms | 48kbps mono, hardware accelerated |
| Network transmission | 50–100ms | Varies by connection quality |
| Server processing (AMS) | <10ms | Minimal processing overhead |
| Network to listener | 50–100ms | Varies by connection quality |
| Decoding & playback | ~10ms | Hardware dependent |
| Total (Glass-to-Glass) | <500ms | Target achieved |
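It helps to add the stages up and compare the sum with the 500ms target. The sketch below does that arithmetic using the figures from the table. Treating "<5ms" and "<10ms" as ranges starting at 0 is an assumption made here, and `summarizeBudget` is a hypothetical helper.

```typescript
interface StageLatency {
  stage: string;
  minMs: number;
  maxMs: number;
}

// Figures from the table above; "<N ms" entries are modeled as 0..N.
const LATENCY_STAGES: StageLatency[] = [
  { stage: "Microphone capture", minMs: 10, maxMs: 10 },
  { stage: "Audio encoding (Opus)", minMs: 0, maxMs: 5 },
  { stage: "Network transmission", minMs: 50, maxMs: 100 },
  { stage: "Server processing (AMS)", minMs: 0, maxMs: 10 },
  { stage: "Network to listener", minMs: 50, maxMs: 100 },
  { stage: "Decoding & playback", minMs: 10, maxMs: 10 },
];

// Sums the best and worst case and reports what is left of the target.
function summarizeBudget(stages: StageLatency[], targetMs: number) {
  const minMs = stages.reduce((sum, s) => sum + s.minMs, 0);
  const maxMs = stages.reduce((sum, s) => sum + s.maxMs, 0);
  return { minMs, maxMs, headroomMs: targetMs - maxMs };
}

console.log(summarizeBudget(LATENCY_STAGES, 500));
// { minMs: 120, maxMs: 235, headroomMs: 265 }
```

The itemized stages come to 120–235ms, which leaves about 265ms of the budget for anything the table does not list, such as jitter buffering or a bad network moment.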

Lessons Learned

1. Raw Audio Wins for Real-Time

Initial testing with echo cancellation and noise suppression enabled resulted in 40–50ms additional latency and robotic audio artifacts. For sports commentary, natural audio with low latency beats processed audio every time.

2. Parallel Platforms Beat Primary/Backup

Running Ant Media Server and MediaSoup simultaneously—not as primary/backup but as parallel streams—means true redundancy. Both are always live.

3. Aggressive Heartbeats Are Essential

Moving from 30-second to 3-second WebSocket pings transformed failure detection: a dead signaling connection is now noticed within a few seconds instead of tens of seconds.

4. Voice Does Not Need Music Quality

48 kbps is plenty for voice commentary. Higher bitrates added overhead without perceptible quality improvement.

Beyond Live: The Altcasting Revolution

While ultra-low latency is the technical foundation, the real innovation is what it enables: a global community of altcasters creating content in their own languages, for their own communities.

Consider the possibilities. A Brazilian altcaster in Tokyo providing Portuguese commentary for a Flamengo match. A French tactician breaking down PSG formations for francophone fans in Africa. A Spanish-speaking altcaster in Los Angeles calling MLS games for the Latino community.

Instant Clip Sharing: The Last 30 Seconds

WeSpeakSports includes a clip feature that lets altcasters instantly capture the last 30 seconds of their commentary and share it directly to social networks. When a spectacular goal happens, the altcaster hits one button and their reaction is ready to post to Twitter, Instagram, or TikTok within seconds.
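A "last 30 seconds" feature implies some kind of rolling buffer that keeps recent audio and discards the rest. The sketch below illustrates the idea with timestamped chunks. It is an assumption about how such a feature could work, not a description of the WeSpeakSports implementation, and `RollingClipBuffer` is a hypothetical name.

```typescript
interface TimedChunk<T> {
  capturedAtMs: number;
  payload: T;
}

// Keeps only the chunks captured within the last `windowMs` milliseconds.
class RollingClipBuffer<T> {
  private readonly windowMs: number;
  private chunks: TimedChunk<T>[] = [];

  constructor(windowMs: number = 30000) {
    this.windowMs = windowMs;
  }

  // Stores a chunk and evicts everything older than the window.
  push(payload: T, capturedAtMs: number): void {
    this.chunks.push({ capturedAtMs, payload });
    const cutoff = capturedAtMs - this.windowMs;
    this.chunks = this.chunks.filter((chunk) => chunk.capturedAtMs >= cutoff);
  }

  // Returns the payloads that fall inside the window ending at `nowMs`.
  snapshot(nowMs: number): T[] {
    const cutoff = nowMs - this.windowMs;
    return this.chunks
      .filter((chunk) => chunk.capturedAtMs >= cutoff)
      .map((chunk) => chunk.payload);
  }
}
```

This works as-is for self-contained chunks such as raw PCM frames. Container formats that put a header in the first chunk would also need that header preserved for the clip to be playable.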

Conclusion

Ultra-low latency WebRTC streaming is not only achievable—it is production-ready. WeSpeakSports demonstrates that with aggressive audio optimization, robust infrastructure (Ant Media Server + MediaSoup running in parallel), and thoughtful architecture decisions, sub-500ms latency is within reach for any real-time application.

For sports fans who want to share their passion as altcasters, milliseconds matter. Every optimization in this stack serves a single goal: ensuring that when the ball hits the net, the altcaster's reaction reaches listeners before they can blink.

The WeSpeakSports platform was built by experienced freelancers available through iReplay.tv. Whether you need WebRTC expertise, Ant Media Server integration, MediaSoup development, or end-to-end streaming architecture, our network of specialists can bring your real-time project to life.